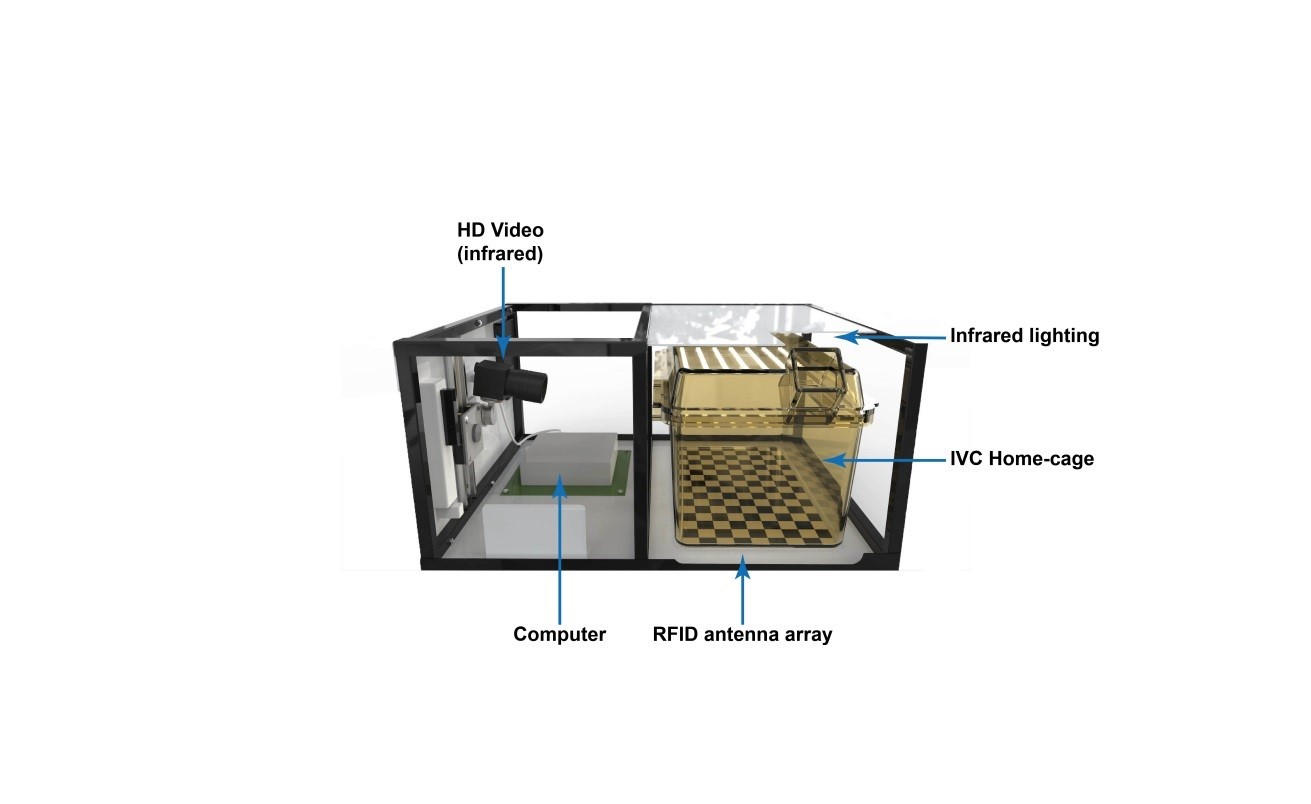

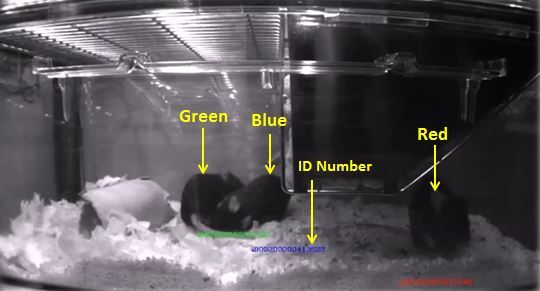

Machine learning has revolutionised the analysis of large datasets in recent years. It can rely either on the automated recognition of recurring patterns, known as unsupervised learning, or on an initial round of manual annotation that teaches the computer how to carry out the analysis, known as supervised learning. The Mary Lyon Centre at MRC Harwell (MLC) has collected large sets of video data generated by its Home Cage Monitoring platform, and analysing these data required supervised learning. Manual annotation was, however, a challenge because of the size of the dataset and the human resources it would require. In July 2018, the MLC and the National Centre for Replacement, Reduction and Refinement (NC3Rs) launched a citizen science project, Rodent Little Brother: Secret Lives of Mice, on the Zooniverse platform. In this first-of-its-kind project, volunteers annotated mouse behaviours in video clips. The aim was to engage the public in generating an annotated dataset with which to train a machine-learning algorithm that could eventually identify and annotate mouse activities automatically, without human intervention.

Through this project, MLC scientists have not only harnessed the power of citizen science to generate scientifically useful data but also opened a global dialogue with the public about the nature of, and the need for, laboratory animal science.

What we learned

The outcomes of this work fall into two main categories. Together, they reflect the scope and ambition of this pioneering citizen science project, and we can learn from them to inform our choices for future iterations of the project or for similar scientific programmes.

The first category relates directly to the scientific data:

- Human Intelligence Tasks (HITs), such as producing the annotations used to train algorithms for detection and action recognition in videos, are prone to a high rate of labelling errors. To minimise such errors, we opted for a majority vote method, in which multiple individuals label the same clip and the label chosen by the majority is taken as the consensus.

- Once every clip had been labelled by 20 different individuals, we collected the data and sent them to our collaborators at the University of Edinburgh to support a master’s research project. Its aim was to quality-control (QC) the data and create a dataset suitable for the supervised machine-learning phase of the project.

- The results of this master’s project were very informative. We discovered that there is an upper limit to how far adding more voters improves the majority vote system. When 20 people with limited training labelled each clip, the votes were spread too widely across the possible labels to reach a consensus. This work is published on the webpage of our collaborators at the University of Edinburgh.

- From this work, we concluded that, for datasets with a high potential for ambiguity, such as the action-oriented labelling tasks in this project, it is preferable to have a small number of trained experts carry out the manual labelling.

- We have since adopted this approach for most of our Human Intelligence Tasks, particularly those that will produce datasets for supervised machine learning.
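The majority vote consensus described above, and the way it breaks down when votes are spread too widely, can be sketched in a few lines of Python. This is a minimal illustration only, not the pipeline used by the MLC or our Edinburgh collaborators: the `majority_label` function, the behaviour labels and the 50% agreement threshold are all hypothetical assumptions chosen for the example.

```python
from collections import Counter
from typing import Optional, Sequence


def majority_label(votes: Sequence[str], min_agreement: float = 0.5) -> Optional[str]:
    """Return the consensus label for one clip, or None if no label wins.

    Hypothetical rule: a label is accepted only if it is the single most
    common vote AND its share of all votes is strictly above `min_agreement`.
    """
    if not votes:
        return None
    # All labels with their vote counts, most common first.
    counts = Counter(votes).most_common()
    top_label, top_count = counts[0]
    # A tie for first place means there is no clear winner.
    if len(counts) > 1 and counts[1][1] == top_count:
        return None
    # Require a strict majority share of all votes cast for this clip.
    if top_count / len(votes) <= min_agreement:
        return None
    return top_label


if __name__ == "__main__":
    # 20 hypothetical volunteers who mostly agree: 14 of 20 votes (70%).
    focused = ["eating"] * 14 + ["grooming"] * 4 + ["drinking"] * 2
    # 20 hypothetical volunteers whose votes are spread across four labels:
    # the top label gets only 7 of 20 votes (35%).
    spread = ["eating"] * 7 + ["grooming"] * 6 + ["drinking"] * 4 + ["climbing"] * 3
    print(majority_label(focused))  # eating
    print(majority_label(spread))   # None
```

Requiring a strict majority rather than a simple plurality is one design choice among several; under a plurality rule, the spread example would return "eating" on just 7 of 20 votes, a consensus too weak to trust as training data.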

The second category covers outcomes related to public involvement in the project. These show how this type of open science platform can achieve a level of engagement that is unattainable with standard techniques, and can lead to interesting conversations and debates:

- More than 4,000 volunteers registered to participate in this project, logging 211,000 labelling events since its launch in August 2019.

- The project page has been visited 19,771 times by 15,554 unique visitors from many regions of the world; China, Russia, India, Brazil and Guatemala are just a few examples that highlight the project’s global reach.

- A total of 4,775 dedicated volunteers have generated over 110 pages of comments, discussions and questions for the research team.

- The funding provided by the MRC helped us to promote the project in local schools and at careers and outreach events at national and international conferences. We have received a significant number of requests from school- and college-aged volunteers to be included in the validation work. These requests reflect the wide demographic range of our participants, from school-aged children to retired individuals, all of whom donated their time to contribute to science.

- In this guest blog post, Emma Robinson, a volunteer on the project who is starting a degree in psychology this autumn, shares her perspective on this exciting initiative.

- The project received the Understanding Animal Research (UAR) Openness Award 2020 in recognition of the “reach and innovation of the project, which engaged many members of the lay public in rodent welfare research, supporting both openness and the humane use of animals in research. This is a huge piece of work which has involved a great many people in animal research, allowing them to see the realities of research for themselves.”